
Addons

1 - Container Object Storage Interface (COSI)

By leveraging CAPI cluster lifecycle hooks, this handler deploys Container Object Storage Interface (COSI) on the new cluster at the AfterControlPlaneInitialized phase.

Deployment of COSI is opt-in via the provider-specific cluster configuration.

The hook uses the Cluster API Add-on Provider for Helm to deploy the COSI resources.

Example

To enable deployment of COSI on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            cosi: {}

2 - Cluster Autoscaler

By leveraging CAPI cluster lifecycle hooks, this handler deploys Cluster Autoscaler on the management cluster for every Cluster at the AfterControlPlaneInitialized phase. Unlike other addons, the Cluster Autoscaler is deployed on the management cluster because it also interacts with the CAPI resources to scale the number of Machines.

The Cluster Autoscaler Pod will not start on the management cluster until the CAPI resources are pivoted to that management cluster.

Note that the Cluster Autoscaler controller must be running for any scaling operations to occur; updating only the min and max size annotations in the Cluster object is not enough. You can, however, manually change the number of replicas by modifying the MachineDeployment object directly.
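As a rough illustration of the manual route, the fragment below shows the single field that would change on a MachineDeployment. The object name and namespace are placeholders rather than values taken from this documentation, and the replica count is arbitrary.

```yaml
# Hypothetical fragment of an existing MachineDeployment; only spec.replicas changes.
apiVersion: cluster.x-k8s.io/v1beta1
kind: MachineDeployment
metadata:
  name: <MACHINE_DEPLOYMENT_NAME>   # placeholder
  namespace: <CLUSTER_NAMESPACE>    # placeholder
spec:
  replicas: 2                       # arbitrary example value
```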

Deployment of Cluster Autoscaler is opt-in via the provider-specific cluster configuration.

The hook uses either the Cluster API Add-on Provider for Helm or ClusterResourceSet to deploy the cluster-autoscaler resources depending on the selected deployment strategy.

Example

To enable deployment of Cluster Autoscaler on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            clusterAutoscaler:
              strategy: HelmAddon
    workers:
      machineDeployments:
        - class: default-worker
          metadata:
            annotations:
              # Set the following annotations to configure the Cluster Autoscaler
              # The initial MachineDeployment will have 1 Machine
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "3"
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "1"
          name: md-0
          # Do not set the replicas field, otherwise the topology controller will revert back the autoscaler's changes

To deploy the addon via ClusterResourceSet replace the value of strategy with ClusterResourceSet.
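For illustration, the addons portion of the clusterConfig variable would then look roughly like the fragment below. It is only an excerpt; the rest of the Cluster definition is assumed to stay unchanged.

```yaml
# Excerpt of the clusterConfig variable value; the surrounding Cluster fields are omitted.
addons:
  clusterAutoscaler:
    strategy: ClusterResourceSet
```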

Scale from zero

Required Cluster labels

CAREN support for scale from zero currently relies on specific labels on the Cluster resource in order for Cluster Autoscaler RBAC to be correctly configured. Ensure that your Clusters have the appropriate cluster.x-k8s.io/provider label as follows:

- CAPA: cluster.x-k8s.io/provider: aws
- CAPX: cluster.x-k8s.io/provider: nutanix
- CAPD: cluster.x-k8s.io/provider: docker

CAREN deploys Cluster Autoscaler with appropriate permissions to enable scaling nodepools from zero. However, CAPI providers must implement functionality as described in the autoscaling from zero proposal in order for scaling from zero to be possible.

For those providers that have not implemented this (e.g. Docker, Nutanix), scaling from zero is still possible by providing annotations on the MachineDeployments to allow Cluster Autoscaler to make appropriate scaling decisions.

The following example shows the required annotations, set on the MachineDeployment in the Cluster topology:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
  labels:
    cluster.x-k8s.io/provider: <PROVIDER>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            clusterAutoscaler:
              strategy: HelmAddon
    workers:
      machineDeployments:
        - class: default-worker
          metadata:
            annotations:
              # Set the following annotations to configure the Cluster Autoscaler
              # The initial MachineDeployment will have 0 Machines
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "3"
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "0"
              capacity.cluster-autoscaler.kubernetes.io/cpu: "8"
              capacity.cluster-autoscaler.kubernetes.io/memory: "8112564Ki"
          name: scale-from-zero-example
          # Do not set the replicas field, otherwise the topology controller will revert back the autoscaler's changes

If the nodepool is labelled and/or tainted, additional annotations are required in order for Cluster Autoscaler to take these labels and taints into account to scale nodepools that have node affinity and/or tolerations configured:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
  labels:
    cluster.x-k8s.io/provider: <PROVIDER>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            clusterAutoscaler:
              strategy: HelmAddon
    workers:
      machineDeployments:
        - class: default-worker
          metadata:
            annotations:
              # Set the following annotations to configure the Cluster Autoscaler
              # The initial MachineDeployment will have 0 Machines
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "3"
              cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "0"
              capacity.cluster-autoscaler.kubernetes.io/cpu: "8"
              capacity.cluster-autoscaler.kubernetes.io/memory: "8112564Ki"
              capacity.cluster-autoscaler.kubernetes.io/labels: "node-restriction.kubernetes.io/my-app="
              capacity.cluster-autoscaler.kubernetes.io/taints: "mytaint=tainted:NoSchedule"
          name: scale-from-zero-example
          # Do not set the replicas field, otherwise the topology controller will revert back the autoscaler's changes
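To make the role of the labels and taints annotations concrete, here is a sketch of a workload that could only be scheduled on such a nodepool, and so could prompt Cluster Autoscaler to scale it up from zero. The Pod name, container name, and image are placeholders; only the label key and the taint are taken from the example annotations.

```yaml
# Hypothetical workload targeting the labelled and tainted nodepool.
apiVersion: v1
kind: Pod
metadata:
  name: my-app                                   # placeholder
spec:
  nodeSelector:
    node-restriction.kubernetes.io/my-app: ""    # label from the labels annotation (empty value)
  tolerations:
    - key: mytaint                               # taint from the taints annotation
      operator: Equal
      value: tainted
      effect: NoSchedule
  containers:
    - name: app                                  # placeholder
      image: <IMAGE>                             # placeholder
```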

3 - CNI

When deploying a cluster with CAPI, deployment and configuration of CNI is up to the user. By leveraging CAPI cluster lifecycle hooks, this handler deploys a requested CNI provider on the new cluster at the AfterControlPlaneInitialized phase.

The hook uses either the Cluster API Add-on Provider for Helm or ClusterResourceSet to deploy the CNI resources depending on the selected deployment strategy.

Currently the hook supports Cilium and Calico CNI providers.

Cilium

Deployment of Cilium is opt-in via the provider-specific cluster configuration.

Cilium Example

To enable deployment of Cilium on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            cni:
              provider: Cilium
              strategy: HelmAddon

Cilium Example With Custom Values

To enable deployment of Cilium on a cluster with custom Helm values, specify the following:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            cni:
              provider: Cilium
              strategy: HelmAddon
              values:
                sourceRef:
                  name: <NAME> # name of a ConfigMap present in the same namespace
                  kind: <ConfigMap>

NOTE: Helm values can only be referenced from objects of kind ConfigMap.

ConfigMap Format:

apiVersion: v1
data:
  values.yaml: |-
    cni:
      chainingMode: portmap
      exclusive: false
    ipam:
      mode: kubernetes
kind: ConfigMap
metadata:
  name: <CLUSTER_NAME>-cilium-cni-helm-values-template
  namespace: <CLUSTER_NAMESPACE>

NOTE: The ConfigMap should contain the complete Helm values for Cilium, because they are applied to the Cilium Helm chart as is.

Default Cilium Specification

Please check the default Cilium configuration.

Select Deployment Strategy

To deploy the addon via ClusterResourceSet replace the value of strategy with ClusterResourceSet.

Calico

Deployment of Calico is opt-in via the provider-specific cluster configuration.

Calico Example

To enable deployment of Calico on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            cni:
              provider: Calico
              strategy: HelmAddon

ClusterResourceSet strategy

To deploy the addon via ClusterResourceSet replace the value of strategy with ClusterResourceSet.
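As a small sketch, the cni section of the clusterConfig variable would then read as follows. Only the excerpt is shown; the rest of the Cluster definition is assumed to be the same as in the Calico example.

```yaml
# Excerpt of the clusterConfig variable value; the surrounding Cluster fields are omitted.
addons:
  cni:
    provider: Calico
    strategy: ClusterResourceSet
```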

When using the ClusterResourceSet strategy, the hook creates two ClusterResourceSets: one to deploy the Tigera Operator, and one to deploy Calico via the Tigera Installation CRD. The Tigera Operator CRS is shared between all clusters in the operator, whereas the Calico installation CRS is unique per cluster.

As ClusterResourceSets must exist in the same namespace as the cluster they apply to, the lifecycle hook copies default ConfigMaps from the namespace that the CAPI runtime extensions hook pod is running in. This enables users to configure defaults specific to their environment rather than compiling the defaults into the binary.

The Helm chart comes with default configurations for the Calico Installation CRS per supported provider, but overriding is possible. For example, to change the Docker provider's Calico configuration, specify the following Helm argument when deploying the cluster-api-runtime-extensions-nutanix chart:

--set-file hooks.cni.calico.crsStrategy.defaultInstallationConfigMaps.DockerCluster.configMap.content=<file>
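Helm's --set-file sets the named key to the contents of the given file, so the same override could in principle be written in a values file by nesting the key path. The sketch below assumes the chart treats the value as a plain string; the content shown is a placeholder, not a real default.

```yaml
# Hypothetical values file equivalent of the --set-file argument above.
hooks:
  cni:
    calico:
      crsStrategy:
        defaultInstallationConfigMaps:
          DockerCluster:
            configMap:
              content: |
                <contents of the file that would otherwise be passed to --set-file>
```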

4 - Node Feature Discovery

By leveraging CAPI cluster lifecycle hooks, this handler deploys Node Feature Discovery (NFD) on the new cluster at the AfterControlPlaneInitialized phase.

Deployment of NFD is opt-in via the provider-specific cluster configuration.

The hook uses either the Cluster API Add-on Provider for Helm or ClusterResourceSet to deploy the NFD resources depending on the selected deployment strategy.

Example

To enable deployment of NFD on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            nfd:
              strategy: HelmAddon

To deploy the addon via ClusterResourceSet replace the value of strategy with ClusterResourceSet.

5 - Registry

By leveraging CAPI cluster lifecycle hooks, this handler deploys an OCI Distribution registry at the AfterControlPlaneInitialized phase and configures it as a mirror on the new cluster.

The registry will be deployed as a StatefulSet with a persistent volume claim for storage and multiple replicas for high availability.

A sidecar container in each Pod running Regsync will periodically sync the OCI artifacts across all replicas.

Deployment of this registry is opt-in via the provider-specific cluster configuration.

The hook will use the Cluster API Add-on Provider for Helm to deploy the registry resources.

Example

To enable deployment of the registry on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            registry: {}

Registry in the workload cluster

When the registry is enabled in the management cluster, it can also be automatically enabled in the workload cluster. To enable this behavior, set the following feature gate on the controller:

--feature-gates=AutoEnableWorkloadClusterRegistry=true

It is also possible to disable this behavior by setting the following annotation on the Cluster resource:

annotations:
  caren.nutanix.com/skip-auto-enabling-workload-cluster-registry: "true"

All images pushed to the management cluster's registry can be automatically synced to the workload cluster's registry. To enable this behavior, set the following feature gate on the controller:

--feature-gates=SynchronizeWorkloadClusterRegistry=true

It is also possible to disable this behavior by setting the following annotation on the Cluster resource:

annotations:
  caren.nutanix.com/skip-synchronizing-workload-cluster-registry: "true"
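For context, a sketch of where these annotations sit on a Cluster resource is shown below. It sets both opt-out annotations at once purely for illustration; either can be used on its own, and the rest of the Cluster spec is omitted.

```yaml
# Illustrative excerpt of a Cluster that opts out of both registry behaviors.
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
  annotations:
    caren.nutanix.com/skip-auto-enabling-workload-cluster-registry: "true"
    caren.nutanix.com/skip-synchronizing-workload-cluster-registry: "true"
```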

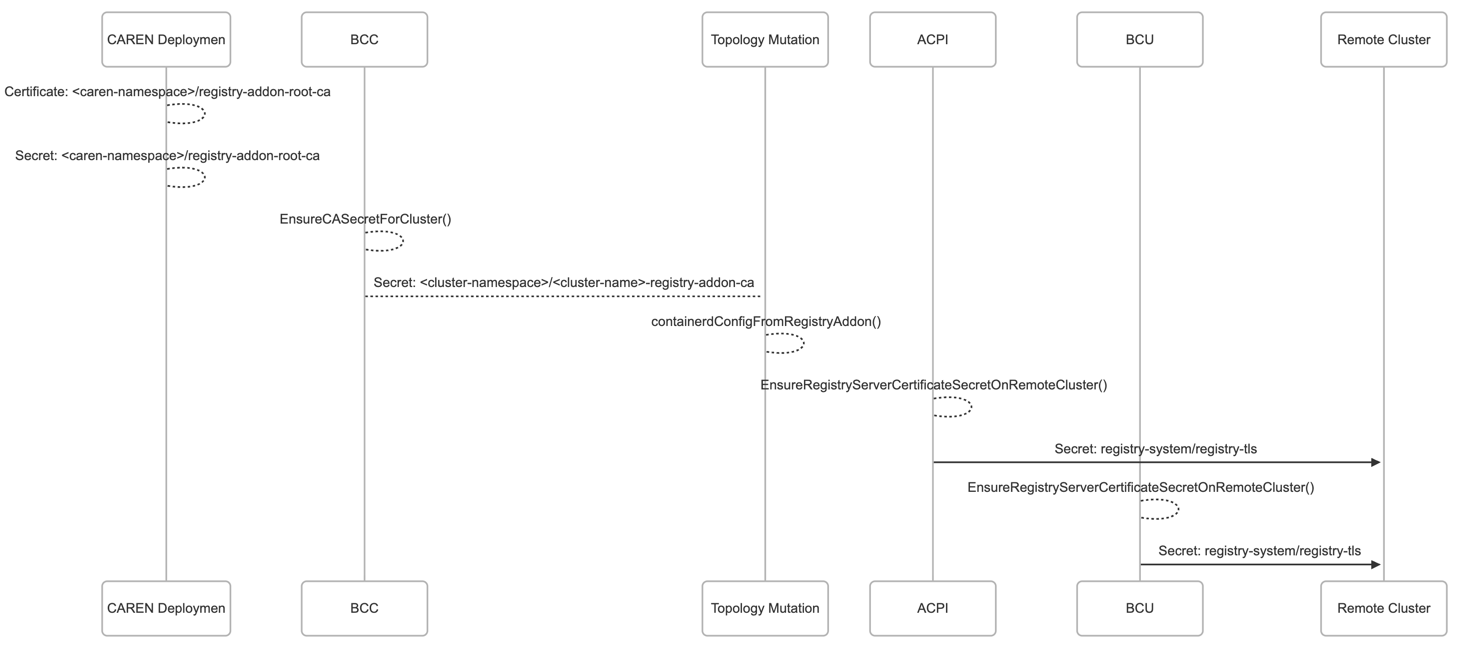

Registry Certificate

- A root CA Certificate is deployed in the provider's namespace.
- cert-manager generates a 10-year self-signed root Certificate and creates a Secret registry-addon-root-ca in the provider's namespace.
- BCC handler copies ca.crt from the registry-addon-root-ca Secret to a new cluster Secret <cluster-name>-registry-addon-ca. A client pushing to the registry can use either the root CA Secret or the cluster Secret to trust the registry.
- The cluster CA Secret contents (ca.crt) is written out as files on the Nodes and used by Containerd to trust the registry addon.
- During the initial cluster creation, the ACPI handler uses the root CA to create a new 2-year server certificate for the registry and creates a Secret registry-tls on the remote cluster.
- During cluster upgrades, the BCU handler renews the server certificate and updates the Secret registry-tls on the remote cluster with the new certificate. It is expected that clusters will be upgraded at least once every 2 years to avoid certificate expiration.
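The root CA step can be pictured with standard cert-manager resources. The following is a minimal sketch and not the manifest shipped with CAREN: the Issuer and Certificate names, the common name, and the namespace placeholder are assumptions, while the Secret name and the 10-year lifetime come from the list above.

```yaml
# Hypothetical self-signed issuer used to bootstrap the root CA.
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: registry-addon-selfsigned     # assumed name
  namespace: <PROVIDER_NAMESPACE>     # placeholder
spec:
  selfSigned: {}
---
# Hypothetical root CA Certificate; cert-manager writes it to the named Secret.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: registry-addon-root-ca        # assumed name
  namespace: <PROVIDER_NAMESPACE>     # placeholder
spec:
  isCA: true
  commonName: registry-addon-root-ca  # assumed
  secretName: registry-addon-root-ca  # Secret name from the list above
  duration: 87600h                    # 10 years (10 x 365 x 24 hours)
  issuerRef:
    name: registry-addon-selfsigned
    kind: Issuer
```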

6 - Service LoadBalancer

When an application running in a cluster needs to be exposed outside of the cluster, one option is to use an external load balancer, by creating a Kubernetes Service of the LoadBalancer type.
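A minimal sketch of such a Service is shown below. The name, selector label, and port numbers are placeholders chosen for illustration and are not taken from this documentation.

```yaml
# Hypothetical Service exposing an application through an external load balancer.
apiVersion: v1
kind: Service
metadata:
  name: my-app            # placeholder
spec:
  type: LoadBalancer
  selector:
    app: my-app           # placeholder Pod label
  ports:
    - port: 80            # port exposed by the load balancer
      targetPort: 8080    # port the application listens on
```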

The Service Load Balancer is the component that backs this Kubernetes Service, either by creating a Virtual IP, by creating a machine that runs load balancer software, or by delegating to APIs, such as those of the underlying infrastructure or a hardware load balancer.

The Service Load Balancer can choose the Virtual IP from a pre-defined address range. You can use CAREN to configure one or more IPv4 ranges. For additional options, configure the Service Load Balancer yourself after it is deployed.

CAREN currently supports the following Service Load Balancers:

- MetalLB

Examples

To enable deployment of MetalLB on a cluster, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            serviceLoadBalancer:
              provider: MetalLB

To enable MetalLB and configure two IPv4 address ranges, specify the following values:

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: <NAME>
spec:
  topology:
    variables:
      - name: clusterConfig
        value:
          addons:
            serviceLoadBalancer:
              provider: MetalLB
              configuration:
                addressRanges:
                  - start: 10.100.1.1
                    end: 10.100.1.20
                  - start: 10.100.1.51
                    end: 10.100.1.70

See MetalLB documentation for more configuration details.
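For orientation, the two address ranges from the example correspond to what MetalLB itself expresses as an IPAddressPool. The sketch below uses MetalLB's own API; whether CAREN generates exactly this object is an assumption, and the pool name and namespace are hypothetical.

```yaml
# Hypothetical MetalLB pool covering the two example ranges.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: example-pool          # assumed name
  namespace: metallb-system   # assumed namespace
spec:
  addresses:
    - 10.100.1.1-10.100.1.20
    - 10.100.1.51-10.100.1.70
```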